Mark Brendanawicz was a character on NBC’s Parks and Recreation for seasons 1 and 2. Before I get into any detail, I wanted to settle something…

What is up with that surname?

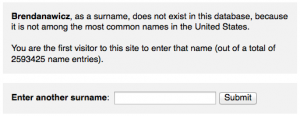

I don’t know a ton about Polish names (Leslie refers to Mark as Polish in season one), but “Brendanawicz” sounds like a made-up name, like someone was making fun of a guy named Brendan at a party, forgot his last name, and ended up referring to him as “Brendan Brendanawicz.” I found a website where you can check whether a name is in the top 150,000 names in the 2000 census. Here’s what I get back on Brendanawicz:

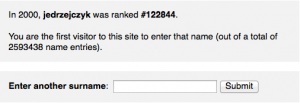

As a point of comparison, I queried the surname of a long lost friend from high school:

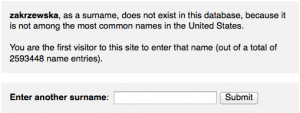

That’s only about 30,000 from the bottom! And now someone I knew at Brandeis:

This is clearly not scientific, nor is it even pretending to be conclusive. Anyway, I guess appearing in the top 150,000 surnames is a bit of a wash. I still think it doesn’t sound real.

The real point of this post

Mark begins the show as kind of a playboy and a bit of an ass. When he begins dating Ann, he starts to act less selfish and actually comes across as a good guy. In fact, he just keeps hitting home runs in the good guy department over the course of season two. Here are some reasons why Mark actually turns out to be a good guy:

- Rather than behaving territorially, he shows compassion toward Andy (Ann’s ex), who still hangs around, expecting Ann to take him back.

- Unlike Andy, Mark understands that Ann is not a prize, but a person. He never tries to assert control over her, and he never stoops to the possessive-boyfriend sitcom stereotype.

- Rather than buying something useless and typical for Ann's birthday, he gets her a computer case. Weeks before her birthday, she had mentioned that she needed one. He listened to what she was saying, wrote it down, and waited to surprise her with it.

- He makes fun of himself. On Valentine's Day, he dresses up and acts out all of the clichés, giving Ann a teddy bear, chocolates, roses, etc., because he's never been in a relationship with someone on Valentine's Day. Ann isn't dressed up; she's hanging out on the couch, just enjoying his company.

- When Ann fails to introduce Mark as her boyfriend to a childhood friend she's always had a crush on, Mark doesn't get jealous, confront her about it, or otherwise act super dramatic. He has every right to be hurt: it was a glaring omission, and her feelings for this friend are obvious. However, he handles it with grace, even going so far as to talk to Andy about it.

What I appreciated about Mark was that he was a good guy who, despite never having been in a serious relationship before, knew enough about human interaction to treat Ann like a person, and not just as a TV Girlfriend Stereotype. They had one of the healthiest, most respectful relationships I've ever seen on TV. Sure, the way the writers started their relationship was kind of shitty, but somehow Ann and Mark worked.

The way they portrayed Mark contrasted with Tom Haverford, who thinks he's starring in Entourage (a show I've never seen), and Ron Swanson, the manliest man or something. Tom hits on women so aggressively, but with so little tact and appeal, that he's turned himself into a joke (a joke who should also be disciplined for sexual harassment on a regular basis). Yeah, his character is funny, but what he does is NOT OKAY. Ron Swanson is Teddy Roosevelt, constantly performing masculinity. He's also quite entertaining, but definitely a caricature.

I very much enjoy watching both Tom and Ron, but having Mark balance them out is a rare treat. You just don't see men behave with such grace, maturity, and self-respect on TV without being…well, Captain Adama.

Are all the admirable men on TV admirals?