Presentation to the College of Humanities and Fine Arts’ 5 at 4 series

March 9, 2022

Pater, Joe. 2019. Generative linguistics and neural networks at 60: foundation, friction, and fusion. Language 95/1, pp. e41-e74.

Leading questions

How is knowledge of language represented?

How is language learned?

The broader questions of how human knowledge is learned and represented have been given two general kinds of answer in cognitive science since the field emerged in the 1950s.

Birth of cognitive science

1980s: Cognitive science as a field

Cognitive science became a recognized interdisciplinary field in the early 1980s, thanks partly to funding from the Sloan Foundation. Barbara Partee of Linguistics collaborated with Michael Arbib of Computer Science to secure Sloan funding to establish interdisciplinary CogSci at UMass Amherst.

The fight over the English past tense

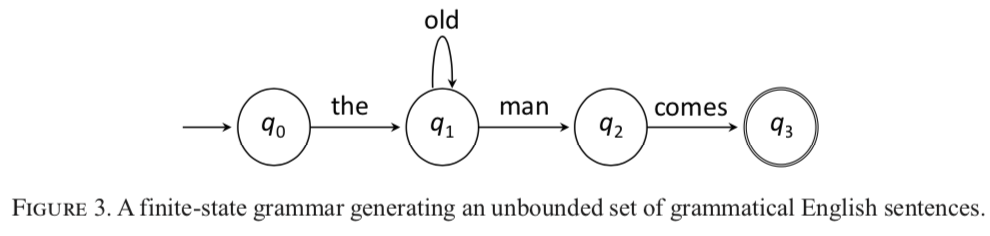

Rumelhart and McClelland (1986) present a Perceptron-based approach to learning and representing knowledge of the English past tense (e.g. love, loved; take, took):

Scholars of language and psycholinguistics have been among the first to stress the importance of rules in describing human behavior…

We suggest that lawful behavior and judgments may be produced by a mechanism in which there is no explicit representation of the rule. Instead, we suggest that the mechanisms that process language and make judgments of grammaticality are constructed in such a way that their performance is characterizable by rules, but that the rules themselves are not written in explicit form anywhere in the mechanism.

Rumelhart and McClelland (1986) On Learning the Past Tenses of English Verbs, pp. 216-217

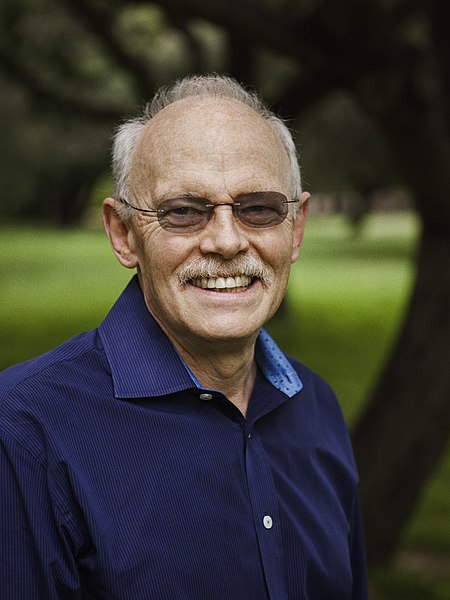

Twenty years ago, I began a collaboration with Alan Prince that has dominated the course of my research ever since. Alan sent me a list of comments on a paper by James McClelland and David Rumelhart. Not only had Alan identified some important flaws in their model, but pinpointed the rationale for the mechanisms that linguists and cognitive scientists had always taken for granted and that McClelland and Rumelhart were challenging — the armamentarium of lexical entries, structured representations, grammatical categories, symbol-manipulating rules, and modular organization that defined the symbol-manipulation approach to language and cognition. By pointing out the work that each of these assumptions did in explaining aspects of a single construction of language — the English past tense — Alan outlined a research program that could test the foundational assumptions of the dominant paradigm in cognitive science.

Steven Pinker (2006) Whatever Happened to the Past Tense Debate? https://escholarship.org/uc/item/0xf9q0n8

Fusion: Optimality Theory

Now: Neural networks’ third wave

Modern computers are getting remarkably good at producing and understanding human language. But do they accomplish this in the same way that humans do? To address these questions, the investigators will derive measures of the difficulty of sentence comprehension by computer systems that are based on deep-learning technology, a technology that increasingly powers applications such as automatic translation and speech recognition systems. They will then use eye-tracking technology to compare the difficulty that people experience when reading sentences that are temporarily misleading, such as “the horse raced past the barn fell,” with the difficulty encountered by the deep-learning systems.

From Brian Dillon’s 2020 NSF award abstract https://www.nsf.gov/awardsearch/showAward?AWD_ID=2020914&HistoricalAwards=false

This project draws on the theories and methods of both linguistics and computer science to study the learning of word stress, the pattern of relative prominence of the syllables in a word. The stress systems of the world’s languages are relatively well described, and there are competing linguistic theories of how they are represented. This project applies learning methods from computer science to find new evidence to distinguish the competing linguistic theories. It also examines systems of language representation that have been developed in computer science and have received relatively little attention by linguists (neural networks).

From NSF project summary of “Representing and learning stress: Grammatical constraints and neural networks”, Joe Pater PI, Gaja Jarosz co-PI