View from Dunton Tower at Carleton University looking north along the Rideau River towards the city of Ottawa.

I am very fortunate this year to have received a Fulbright award. The College of Humanities and Fine Arts at UMass made it possible for me to spend the academic year at Carleton University in Ottawa, Ontario, Canada. While here, I’m working on a natural-language parser of Old English, which I will use to create a semantic map of Old English nouns. In short, I want a computer to recognize an Old English noun and then find all words associated with it. Nouns are names for entities in the world. So a semantic map tells us something about how a language permits people to associate qualities with entities.

Following in the footsteps of artificial intelligence researchers like Waleed Ammar of the Allen Institute for Artificial Intelligence, I will be using untagged corpora, that is, texts that no one has marked up for grammatical information. I would like to interfere with the data as little as possible.

What makes this project different from similar NLP projects is my aim. I want to produce a tool that can be used by literary critics. I am not interested in improving Siri or Alexa or a pop-up advertisement that wants to sell you shoes. Nor is my aim to propose hypotheses about natural languages, which is a general aim of linguistics-oriented NLP projects. So, the object of my inquiry is artful writing, consciously patterned language.

STAGE ONE

The first stage is to write a standard NLP parser using tagged corpora. The standard parser will serve as a check on the results of the non-standard parser. Thanks to the generosity of Dr. Ondrej Tichý of Charles University in Prague, the standard parser is now equipped with a list of OE lexemes, parsed for form. A second control mechanism is the York-Toronto-Helsinki Parsed Corpus of Old English Prose, which is a tagged corpus of most of Aelfric’s Catholic sermons.

STAGE TWO

At the same time, I have divided the OE corpus into genres. In poetic texts, dragons can breathe fire. But in non-fictional texts, dragons don’t exist. So a semantic field drawn around dragons will change depending on genre. I am subdividing the poetry according to codex, and then according to age (as far as is possible) to account for semantic shift. Those subdivisions will have to be revised, then abandoned as the AI engine gets running. (I’ll be using the Python library fastai to implement an AI.)
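To make the point about genre concrete, here is a minimal sketch that counts the words found near a target noun, separately for each genre. The lemma lists, the genre labels, and the function name `neighbours_by_genre` are all invented for illustration. This is plain co-occurrence counting, not the fastai model.

```python
from collections import Counter, defaultdict

def neighbours_by_genre(docs, target, window=2):
    """Count tokens within `window` positions of `target`, per genre."""
    counts = defaultdict(Counter)
    for genre, tokens in docs:
        for i, tok in enumerate(tokens):
            if tok != target:
                continue
            lo = max(0, i - window)
            hi = min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    counts[genre][tokens[j]] += 1
    return counts

# Toy lemma sequences, invented for illustration (not corpus quotations).
docs = [
    ("poetry", ["draca", "fyr", "hord", "beorh"]),
    ("poetry", ["eald", "draca", "fyr"]),
    ("prose", ["draca", "deofol", "boc"]),
]

field = neighbours_by_genre(docs, "draca")
print(field["poetry"].most_common(1))  # [('fyr', 2)]
print(sorted(field["prose"]))          # ['boc', 'deofol']
```

In this toy data "fyr" turns up beside "draca" only in the poetry, which is the kind of genre-dependent difference a semantic map would need to record.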

Notes

Unicode. You’d think that searching a text string for another text string would be straightforward. But nothing is easy! A big part of preparing the Old English Corpus for manipulation is ensuring that the bits and bytes are in the right order. I had a great deal of difficulty opening the Bosworth-Toller structured data. It was encoded in UTF-16, the encoding that Windows uses internally for text. When I tried to open it in Python, the interpreter raised an error. It turns out, Unicode is far more complex than one imagines. Although I can find ð and þ, for example, I cannot find them as the first characters of words after a newline (or even with the regex word boundary \b). Another hurdle.
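For readers who hit the same wall, here is a minimal sketch of reading a UTF-16 file in Python by naming the encoding explicitly, then searching for words that begin with þ or ð. The temporary file and its two short lines are stand-ins, not the Bosworth-Toller data.

```python
import os
import re
import tempfile

sample = "þæt wæs god cyning\nðær wæs\n"

# Write a small UTF-16 file standing in for the real data.
path = os.path.join(tempfile.mkdtemp(), "sample.txt")
with open(path, "w", encoding="utf-16") as f:
    f.write(sample)

# Naming the encoding lets Python read the byte-order mark and decode
# the 16-bit units into ordinary str characters.
with open(path, encoding="utf-16") as f:
    text = f.read()

# For str patterns, \b and \w are Unicode-aware, so þ and ð count as
# letters and \b matches just before them at the start of a word.
initial = re.findall(r"\b[þð]\w*", text)
print(initial)  # ['þæt', 'ðær']
```

If a search like this fails on real data even though the file decodes cleanly, the characters in the data may not be the code points they appear to be.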

Overcame it! The problem was in the data. For reasons unknown to me, Microsoft Windows encodes runic characters differently than expected. So the solution was to use a text editor (BBEdit), go into the data, and replace all original thorns with regular thorns. Same for eth, æsc, and so forth. Weirdly, it didn’t look like I was doing anything: both thorns looked identical on the screen.
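Characters that look identical on screen can still be different code points, and Python can show the difference. The sketch below lists each character's code point and Unicode name, then maps look-alikes onto the expected letters. I did not record which code points were actually in the file, so the look-alike used here (Greek small letter sho, U+03F8) and the names `describe`, `clean`, and `LOOKALIKES` are assumptions for illustration.

```python
import unicodedata

def describe(s):
    """Return (character, code point, Unicode name) for each character."""
    return [(ch, f"U+{ord(ch):04X}", unicodedata.name(ch, "UNNAMED")) for ch in s]

# Hypothetical look-alikes; the real offending code points are not known.
LOOKALIKES = {
    "\u03f8": "\u00fe",  # Greek small letter sho -> Latin small letter thorn
}

def clean(s):
    """Compose combining sequences (NFC), then map known look-alikes."""
    s = unicodedata.normalize("NFC", s)
    return "".join(LOOKALIKES.get(ch, ch) for ch in s)

suspect = "\u03f8\u00e6t"  # renders much like "þæt" but starts with Greek sho
for row in describe(suspect):
    print(row)

print(clean(suspect) == "\u00fe\u00e6t")  # True
```

Running `describe` over a suspect word before and after the repair confirms that a replacement actually changed something, even when the screen shows no difference.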

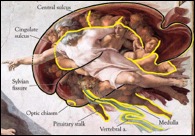

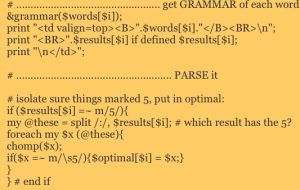

Screenshot of parser guts so far. Markup is data from Tichý’s Bosworth-Toller. Inflect receives Markup, then returns inflections based on the gender of strong nouns. Variants and special cases have not yet been included.
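Here is a minimal sketch of what an inflection step like this might look like. It is not the code in the screenshot: the function name `inflect`, the table `STRONG_ENDINGS`, and the choice to pass in a stem rather than a headword are assumptions. The endings are the textbook paradigms for strong masculine and neuter a-stems and feminine ō-stems, and variants (long-stem neuters, alternative plurals and so on) are left out.

```python
CASES = ("nom", "acc", "gen", "dat")

# Simplified endings by gender; real paradigms have many variants.
STRONG_ENDINGS = {
    "m": {"sg": ("", "", "es", "e"), "pl": ("as", "as", "a", "um")},
    "n": {"sg": ("", "", "es", "e"), "pl": ("u", "u", "a", "um")},
    "f": {"sg": ("u", "e", "e", "e"), "pl": ("a", "a", "a", "um")},
}

def inflect(stem, gender):
    """Return {(number, case): form} for a strong noun stem."""
    endings = STRONG_ENDINGS[gender]
    return {
        (number, case): stem + ending
        for number, row in endings.items()
        for case, ending in zip(CASES, row)
    }

forms = inflect("stan", "m")
print(forms[("sg", "gen")])  # stanes
print(forms[("pl", "dat")])  # stanum
```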

To finish STAGE ONE, I’ll now inflect every noun, pronoun, and adjective, then conjugate every verb as it comes in (on the fly). Ondrej Tichý at Charles University in Prague, who very generously sent me his structured data, took a slightly different approach: he generated all the permutations first and placed them into a list. Finally, as a sentence comes in, I’ll send off each word to the parser, receive its markup and inflections/conjugations, then search the markup for matches.
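The two approaches can be compared in a small sketch. Everything here is hypothetical: the three-stem lexicon, the masculine-only ending table, and the function names `lookup_on_the_fly` and `build_index`. The point is only that both strategies should return the same analyses for a given word.

```python
from collections import defaultdict

# Toy lexicon of strong masculine noun stems (assumed, for illustration).
LEXICON = ["stan", "cyning", "hlaf"]
MASC_ENDINGS = {
    ("sg", "nom"): "", ("sg", "acc"): "", ("sg", "gen"): "es", ("sg", "dat"): "e",
    ("pl", "nom"): "as", ("pl", "acc"): "as", ("pl", "gen"): "a", ("pl", "dat"): "um",
}

def forms_of(stem):
    """Yield (surface form, (number, case)) pairs for one stem."""
    for tag, ending in MASC_ENDINGS.items():
        yield stem + ending, tag

def lookup_on_the_fly(word):
    """Inflect each lexeme as the word comes in and keep the matches."""
    return [(stem, tag) for stem in LEXICON
            for form, tag in forms_of(stem) if form == word]

def build_index():
    """Generate every form once, up front, and index by surface form."""
    index = defaultdict(list)
    for stem in LEXICON:
        for form, tag in forms_of(stem):
            index[form].append((stem, tag))
    return index

INDEX = build_index()
for word in "cyninges stanas".split():
    # Both strategies agree; they differ in when the work is done.
    assert lookup_on_the_fly(word) == INDEX[word]
    print(word, INDEX[word])
```

Generating on the fly keeps memory small but repeats the inflection work for every incoming word. The precomputed index costs memory up front and turns each lookup into a single dictionary access.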

Has anyone done this since Angus Cameron suggested it in 1973? I separated the Corpus of Old English into genres and sub-genres. That enabled me to find words unique to poetry. The poetic texts are largely from the ASPR, but include Chronicle poems, the Meters of Boethius, and others.
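Once the corpus is split by genre, finding words unique to one genre comes down to a set difference. This is a minimal sketch: the function name `unique_to` and the three tiny vocabularies are invented for illustration and are not results from the corpus.

```python
def unique_to(genre, vocab_by_genre):
    """Return words attested in `genre` and in no other genre."""
    others = set().union(
        *(vocab for g, vocab in vocab_by_genre.items() if g != genre)
    )
    return vocab_by_genre[genre] - others

# Toy vocabularies, invented for illustration.
vocab_by_genre = {
    "poetry": {"guma", "mann", "cyning"},
    "prose": {"mann", "cyning", "boc"},
    "gloss": {"boc", "mann"},
}

print(sorted(unique_to("poetry", vocab_by_genre)))  # ['guma']
```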