Trinity College Library

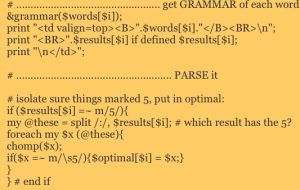

Thanks to the generosity of the College of Humanities and Fine Arts at UMass, the parser is now working at a very basic level. Here it is: http://www.bede.net/misc/dublin/parse.html

Mark Faulkner of Trinity College Dublin, aided by the generosity of the Irish Research Council, brought me to Trinity in July to describe the parser. He hosted a terrific conference where a wide variety of scholars and scientists presented big-data projects. One conclusion from my perspective was that medieval texts (like texts in all ancient languages) are as subject to the Law of Large Numbers as network packets, social media posts, and traffic patterns are. One surprise was how few samples were needed.

Specifically, texts (that is, generically similar sets of syntactically correct utterances) will approach audience expectations as defined by genre, language, and period. These expectations are in turn characterized by sets of related vocabulary items. For example, a sermon employs certain words to indicate to an audience that it is indeed a sermon. Conversely, it is by virtue of certain vocabulary items that we classify a text as a sermon. (See Michael Drout, Lexomics.) Moreover, those vocabulary items tend to come in serially arranged chunks so that, as an extreme example, an adventure story may use words to describe setting and character before using words that describe dying.
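To make the idea of serially arranged vocabulary concrete, here is a minimal sketch in Python. It is not the parser's code. The function name `chunk_profile`, the two vocabulary sets, and the toy story are all invented for illustration. The sketch cuts a text into consecutive chunks and counts how many words from each vocabulary set land in each chunk.

```python
from collections import Counter

def chunk_profile(tokens, vocab_sets, n_chunks):
    """Split tokens into at most n_chunks serial chunks and count, per chunk,
    how many tokens belong to each labelled vocabulary set."""
    size = max(1, -(-len(tokens) // n_chunks))  # ceiling division
    profile = []
    for start in range(0, len(tokens), size):
        chunk = tokens[start:start + size]
        counts = Counter()
        for tok in chunk:
            for label, words in vocab_sets.items():
                if tok in words:
                    counts[label] += 1
        # Report every label, including those with zero hits in this chunk.
        profile.append({label: counts[label] for label in vocab_sets})
    return profile

# Toy data, invented for this example (not drawn from any corpus).
story = ("the hall stood by the sea the hero sailed "
         "the hero fought the hero died in battle").split()
vocab_sets = {
    "setting": {"hall", "sea"},
    "dying": {"died", "battle"},
}

profile = chunk_profile(story, vocab_sets, n_chunks=2)
for i, counts in enumerate(profile, start=1):
    print(i, counts)
```

In this toy case the setting words fall in the first chunk and the dying words in the second, which is the kind of ordering a genre classifier could take into account.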

Consequently, a parser that seeks to classify for genre or for aesthetic particulars considers not only vocabulary, but related vocabulary items, and the order in which those items appear over the span of the text. That parser will also consider phrases and phrase structure. Old English poetry, once recognized through its combination of rhetorical tropes (alliteration, hypotaxis, etc.), can be parsed metrically. More importantly, word combinations and clusters can be searched for and a stochastic algorithm applied in order to yield high-frequency clusters. That algorithm is the challenge. Although Google has developed similar algorithms to rank websites and to effect advertiser auctions, deciding on the likelihood of a grammatical claim is a different problem. My challenge over the next year or so is to break down the assumptions a fluent reader of Old English makes when reading a text. The biggest roadblock will be the desire to put in my own grammatical knowledge. A Natural Language Parser does not rely on a grammarian’s parse of a text. It relies instead on all texts ever written in that language.
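As a rough illustration of searching for high-frequency clusters, the sketch below counts adjacent word pairs and scores them with pointwise mutual information (PMI). PMI is a common textbook measure that I am swapping in as a stand-in; it is not the algorithm the parser will end up using. The function name `bigram_scores` and the toy lines are assumptions, and the spellings are normalized to plain ASCII for the example.

```python
from collections import Counter
import math

def bigram_scores(lines, min_count=2):
    """Return adjacent word pairs seen at least min_count times, each with
    (count, PMI), sorted by count and then by PMI, highest first."""
    unigrams = Counter()
    bigrams = Counter()
    for tokens in lines:
        unigrams.update(tokens)
        bigrams.update(zip(tokens, tokens[1:]))  # pairs within one line only
    total_uni = sum(unigrams.values())
    total_bi = sum(bigrams.values())
    scores = {}
    for (a, b), n in bigrams.items():
        if n < min_count:
            continue
        p_ab = n / total_bi
        p_a = unigrams[a] / total_uni
        p_b = unigrams[b] / total_uni
        scores[(a, b)] = (n, math.log2(p_ab / (p_a * p_b)))
    return sorted(scores.items(), key=lambda kv: (-kv[1][0], -kv[1][1]))

# Toy lines in normalized spelling, used only to exercise the function.
lines = [
    "beowulf mathelode bearn ecgtheowes".split(),
    "hrothgar mathelode helm scyldinga".split(),
    "beowulf mathelode bearn ecgtheowes".split(),
]

ranked = bigram_scores(lines)
for pair, (count, pmi) in ranked:
    print(pair, count, round(pmi, 2))
```

With so little data the scores mean nothing. The point is only that the ranking comes from counts over the texts and not from a grammarian's rules.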

The most exciting possibility to my mind is using glosses to tie OE literature to its Latin and Celtic analogues. A gloss is a single-word translation of a word from one language into another. For example, French eau can be glossed by English water. Old English scribes glossed many Latin words, writing the glosses in tiny letters above the Latin. Using these glosses, we could connect OE texts to Latin texts more closely and trace the migration and adaptation of images and collocations over time. Paired with information about the movement of manuscripts, this would let us map the dispersal of ideas, images, and metaphors over time and space.
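One way to picture the gloss idea is as a lookup table from Old English words to the Latin words they gloss, which can then point from an OE passage to Latin texts that share the linked vocabulary. The sketch below assumes such a table exists. The gloss pairs, text titles, and function names are all hypothetical placeholders in simplified ASCII spelling, not real manuscript data.

```python
from collections import defaultdict

def build_gloss_index(gloss_pairs):
    """Map each Old English gloss to the set of Latin words it glosses."""
    index = defaultdict(set)
    for latin, old_english in gloss_pairs:
        index[old_english].add(latin)
    return index

def linked_latin_texts(oe_tokens, gloss_index, latin_texts):
    """Return the Latin texts that contain words linked by gloss to the OE
    tokens, along with the shared Latin words for each text."""
    latin_words = set()
    for tok in oe_tokens:
        latin_words |= gloss_index.get(tok, set())
    hits = {}
    for title, tokens in latin_texts.items():
        shared = latin_words & set(tokens)
        if shared:
            hits[title] = sorted(shared)
    return hits

# Hypothetical gloss pairs (Latin word, Old English gloss).
gloss_pairs = [("aqua", "waeter"), ("rex", "cyning"), ("mare", "sae")]
gloss_index = build_gloss_index(gloss_pairs)

# Hypothetical token lists standing in for real texts.
oe_tokens = ["se", "cyning", "for", "ofer", "sae"]
latin_texts = {
    "text_a": ["rex", "trans", "mare", "navigavit"],
    "text_b": ["aqua", "vitae"],
}

hits = linked_latin_texts(oe_tokens, gloss_index, latin_texts)
print(hits)
```

A real version would need lemmatized forms and manuscript dates attached to each text before anything could be said about migration over time.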

Because some of these metaphors constitute a defining characteristic of a genre (such as lyric), we can watch the evolution of genre over time. And by examining the structure and constitution of these texts in multiple languages, we could observe the interrelation and mutual influence of written cultures.

The bottom line? In the next stage, I have to treat a text like an organism. Ask what other organisms are like it. Then try to dissect their DNA and determine which genetic markers came from where.